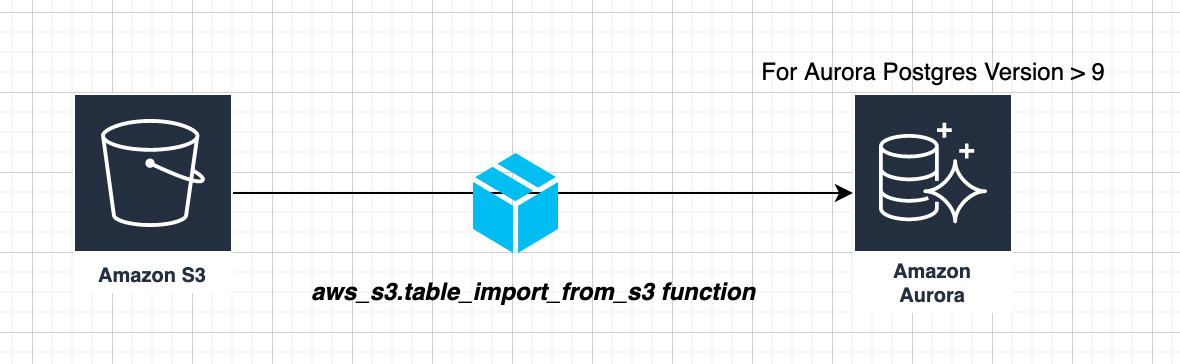

Scenario 1: To copy data from S3 to Aurora Postgres (greater than v9 or latest)

How?: We can use aws_s3.table_import_from_s3 function (to migrate the data from S3 to Aurora Postgres).

Steps:

A sample file with columns - id, prefix, mstr_id is copied to S3.

Create schema on Aurora Postgres (with the required columns).

drop table core.mstr; CREATE TABLE core.mstr ( id varchar(300) NULL, prefix varchar(300) NULL, mstr_id float8 NULL );

Copy command to transfer the data from S3 to Aurora Postgres

SELECT aws_s3.table_import_from_s3( 'core.MSTR', 'id,prefix,mstr_id', '(format csv, header true)', '<bucket-name>', 'MSTR_DATA/part_file_00.csv', 'us-east-2', '<secret key>', '<access key>' );

Note: If IAM roles are given, we need not specify access keys.

SELECT aws_s3.table_import_from_s3( 'core.MSTR', 'id,prefix,mstr_id', '(format csv, header true)', '<bucket-name>', 'MSTR_DATA/part_file_00.csv', 'us-east-2' );

Note: aws_s3.table_import_from_s3 function is not supported for versions earlier than Aurora Postgres Version 9.

Scenario 2: To copy data from S3 to Aurora Postgres (older versions than 9)

How? : Use SQOOP Job to export the data from S3 to Aurora Postgres.

Steps:

Upload data files to s3.

Create schema on Aurora Postgres (with the required columns).

drop table core.mstr; CREATE TABLE core.mstr ( id varchar(300) NULL, prefix varchar(300) NULL, mstr_id float8 NULL );

- Must have access to S3 and Aurora Postgres

- Check for connectivity:

- Whether EMR cluster and RDS reside in same VPC

- dig <Aurora hostname>

- nc -vz <hostname> (must get a message: connectivity looks good)

- Make sure the security groups are properly assigned to the EMR Cluster.

- The security group must ALLOW traffic from CORE node of EMR Cluster

- Must be added to the RDS Security Group as a source

echo "Exporting MSTR table from S3 to Postgres"

echo "Sqoop Job in Progress"

sqoop export

--connect <jdbc URL>

--input-null-string NULL

--input-null-non-string NULL

--table mstr

--export-dir s3://<bucket_name>/MSTR/

--username user_etl

--P

-- --schema testecho "Sqoop Job is executed successfully"

echo "Data will now be available on Aurora Postgres"

Comments

Post a Comment